Rasch Forum: 2006

Rasch Forum: 2007

Rasch Forum: 2008

Rasch Forum: 2009

Rasch Forum: 2010

Rasch Forum: 2011

Rasch Forum: 2012

Rasch Forum: 2013 January-June

Rasch Forum: 2014

Current Rasch Forum

Firoozi December 5th, 2013, 1:16pm: Conducting a study on the construct validity of Iranian national university entrance exam applying Rasch model, I’m on the fence about interpreting the results of the DIF output Table. Following Draba’s (1977) criterion, an item estimate difference > 0.5 logits, no DIF item is found. On the other hand, 10 out of 16 items flag for DIF in the “Prob” column (P<0.05). One point that may commit a Type 1 error is the large sample size of the study, 18,821 participants. For more clarification the DIF Table of the study is attached. I would appreciate your suggestion about what criterion to follow in reporting the DIF output of my study.

Mike.Linacre:

Thank you for asking, Firoozi.

In a DIF analysis, we need both size and significance:

Significance: the DIF effect must be improbable enough not to be only an accident. Your sample size is so large that a very small DIF effect is not an accident statistically.

Size: the DIF effect must be big enough to make a substantive difference to decisions based on the test. You must decide what "size" criterion to apply. For a test of 16 dichotomous items, every person ability measure has a standard error greater than 0.5 logits. (Please look at your output to confirm this). The size of the biggest DIF of 0.31 logits (ITEM 4) is smaller than the measurement error of the person measures. The average impact of that DIF on the person measures is DIF/(number of items) = 0.31/16 = 0.02 logits.

gleaner:

For a test of say for example 15 items measuring a common latent trait (e.g. listening, or anxiety). When only 4 of 15 items has DIF present effect, while in the other items, they have not.

Shall we reject the hypothsis that the ability calibrated in the test of 15 items has DIF effect?

Mike.Linacre:

Gleaner, lawyers for minority groups love to make a big fuss about DIF, but often, when we look at the overall effect of DIF on the person measures for the group we discover that those lawyers are "full of sound and fury, signifying nothing." (Shakespeare).

So, what is the effect of those 4 DIF items on the mean ability estimate of the focus group? The size of the overall DIF effect is (sum of 4 DIF effects)/15. On a test of 15 items, this is almost certainly much less than the S.E. of a person measure, so it would be undetectable for an individual. But it could be noticeable for a whole group. So let us compare this overall DIF size to the S.E. of the mean ability of the focus group. If the overall DIF effect is greater than 2*S.E (mean focus group), then we can say that the DIF is statistically significant for the group, even if it is not significant for an individual in the group.

Firoozi:

Thank for your helpful suggestion Dr. Linacre.

You mentioned according to the attached DIF Table, the standard error for each person measure is greater than the DIF contrast of the two classes. Now the problem is that the columns I see in the Table refer to the standard error of item measure not the person and their values are less than the DIF contrast. For example, the “DIF CONTRAST” value for ITEM 4 is 0.31 and its standard error for the second class is 0.02. I would be most grateful if you make this claim more clear to me.

Mike.Linacre:

Firoozi, please look at the person-measure Tables. For instance, in Winsteps Table 3.1, what is the average person-measure S.E.?

Now compare that average S.E. with the size of the item DIF ....

gleaner: Thanks a lot, Dr Linacre!

uve: Would it be Table 3.1 for all persons or Table 28.3 for the focus group in question?

Mike.Linacre: Uve, it would be surprising if the two Tables led us to different conclusions. However, since DIF is so political, choose the Table with the smaller average person-measure S.E.

Firoozi:

Dear Dr.Linacre,

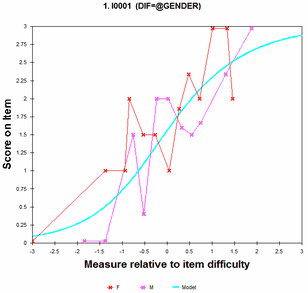

Thanks for your previous helpful guidelines on investigating DIF. Following Messick's (1989, 1995) six aspects of construct validity to provide evidence for (in)validity of the Iranian University Entrance Exam, I found different evidences in support of invalidity of the test. The attached person-item maps of different sections of the test can be regarded as a prima facie evidence for the invalidity of this high stake test. In addition, the mean square and standard fit indices of 6 out of 16 grammar items, 7 out of 14 vocabulary items, and 11 out of 20 reading items are beyond the acceptable value proposed by Wright and Linacre (1994). However, following Draba’s (1977) criterion, and applying your guidelines, none of the sixty items of the test show gender DIF. Now, the question is that how can we consider these DIF free items logical while no other evidences support the validity of the test. As clear from the attached Figure, the bulk of the items on the left are above the ability level of the majority of the persons on the right. Hence, can we regard the misfiting items as a justification for DIF free items?

Mike.Linacre:

Thank you for your question, Firoozi.

What type of items are these? Rating scales? Partial credit? Multiple choice? Cloze?

If these are multiple-choice questions, please verify that the multiple-choice scoring key is correctly applied.

Firoozi: The test is 6o multiple choice questions. I checked the answer key again and made sure that it was correctly applied. One point worth mentioning is that all the missing values is replaced by zero in the data. could DIF free items be as the result of this recoding?

Mike.Linacre:

Yes Firoozi. If "missing" means "skipped", then score as 0 for DIF, but if "missing" means "not reached", then score as "not administered" for DIF.

If this is MCQ, then many examinees are much below the guessing-level, and so the validity of the test is in doubt. Are they merely guessing or response-setting or other unwanted behavior?

gleaner:

So for MCQ, before entering responses for Rasch analysis, the categorization of missing values should be done very carefully, shall I understand this way, Dr Linacre?

However, it is not so easy to discriminate all the missing responses into skipped or not reached.

Probably, if test developers would like to put missing responses into the two categories, post-test interview might be necessary.

Sorry to put in this quesiton in this thread.

How about the missing responses in the other types of research methods, e.g. survey, rating scale? Do the missing responses need to be carefully categorized as well before entering data for analysis?

Thanks a lot!

Mike.Linacre:

Gleaner, the missing responses need to be categorized according to their reasons, and also the purposes of the analysis.

For instance, for measuring the persons, we may want to make all missing responses into wrong answers = 0.

But, for calibrating the items, we may only want to score a wrong answer when an examinee has thought about the question and decided not to answer = skipped.

We usually treat as "skipped" any missing response before the last answered question. All missing responses after the last answered question are "not reached" = "not administered".

So, a procedure is:

1) estimate the item difficulties: skipped=0, not reached = not administered

DIF studies are done here.

2) estimate the person measures for reporting: skipped =0, not reached=0, and the items are anchored (fixed) at their estimated difficulties from 1).

gleaner:

I see why it is a must for categorizing missing responses according to research purposes and reasons.

If necessary, for calibrating items, there is a possibility that some of the missing responses are simply not reached or administered, while some of them skipped for lack of time or beyond the test takers' proficiency. However, it is very likely that test takers would keep all the items answered by wild guesses, since no wrong responses are charged points off their score and the time is available for filling them all though not for careful consideration.

For the skipped and the not reached missing responses, if "We usually treat as "skipped" any missing response before the last answered question. All missing responses after the last answered question are "not reached" = "not administered"." and we can rule out the possibility that the test taker simply answer under the state of wild guess on the last answered item. So the exact situation is far more complex as you said. Right?

Mike.Linacre: Yes, Gleaner. An example is: https://www.rasch.org/rmt/rmt61e.htm

gleaner:

Thanks a lot, Dr Linacre, for teaching and help that you give in my learning!

Happy New Year to you and all the other Rasch pals in the forums!

gleaner December 27th, 2013, 2:49am:

Recent issue of RMT asks the question "Is now the time for a Rasch

measurement MOOC?"

Yes indeed. It is the optimal occasion for Rasch to go MOOC.

Some online courses on Rasch are available but are exclusive to some, not all people of all walks who are interested in Rasch. It is a pity indeed.

Mike.Linacre:

Yes, gleaner, Massive Open Online Courses for Rasch would be wonderful. The problem is that, though MOOC may be free to participants, they cost a lot of time, money and resources to construct and sometimes to operate.

www.winsteps.com/tutorials.htm is a step in that direction for Rasch.

gleaner:

Yes, MOOC courses cost a lot to create.

I watched some free video clips on Rasch you kindly created and uploaded ( probably so because the uploader ;D named by Linacre as well) online.

Thanks a lot, Dr Linacre, for your Rasch on the run and free tutorials on winsteps and facets!

Hope to see someone will create the first MOOC on Rasch soon. There are a lot on CTT illustrating with examples of R or SPSS.

Mike.Linacre: Yes, gleaner. Some Rasch functionality is available with many software packages, such as R, - see https://www.rasch.org/software.htm . It would be great if an R expert composed a tutorial in which a competent Rasch analysis of a meaningful dataset is conducted with R. One by-product would be a suite of R procedures with most of the functionality of Winsteps or RUMM.

gleaner:

R is gaining momentum in statistics.

Probably R will survive and thrive in the future if it is appreciated by the general public. So far Winsteps and Facets still reign when it comes to Rasch analysis. They are two of the most popular softwares.

gleaner December 22nd, 2013, 12:42pm:

New addition to Rasch literature is a book from SpringerLink

Boone, W. J., Staver, J. R., & Yale, M. S. (2013). Rasch analysis in the human sciences: Springer.

Rasch is fantastic and amazing. It does contribute to new insights into some old issues. Simple, qualitative as well as quantitative.

A great coursebook for Rasch newbies like me at least.

Practical and reader-friendly. Theory and practice combined. However, one critical point is missing. What can't Rasch do? Or what is the limitation of Rasch?

For sure in order to avoid the abuse of Rasch in research, the scope of Rasch needs to be defined.

It seems that no Rasch advocates tell the Rasch newbie about that.

How do you think about the issue, Dr Linacre? Thanks a lot!

Mike.Linacre:

Thank you for requesting my input, gleaner.

The scope of Rasch is easy to define:

If you want to construct a unidimensional variable from ordinal data, with the resulting estimates (person abilities, item difficulties) delineated in equal-interval (additive) units, then use Rasch.

Otherwise, don't.

The usual "otherwise" situation is that you want to describe (summarize) the data in a not-to-complex way. Person raw scores and item p-values are the most common simple summaries, i.e., Classical Test Theory (CTT). If CTT is deemed too simple, then Lord's Item Response Theory (IRT) models are the next increment in complexity of describing data. And, if you want yet greater descriptive complexity, data-mining methodology awaits.

OK, gleaner?

gleaner:

Thanks a lot for your prompt reply on this.

If every coursebook on statistics had some more information on what can't be done with the statistics learned, then that would be better.

Because some books on statistics appear to be preaching that hey boy, this is the best yet to be, there can't be better. But the reality is not the case.

There is an issue of appropriateness of adopting some statistics method and matching research question with the statistical tool.

Mike.Linacre: Indeed so, gleaner. Perhaps we statisticians (and software developers) have more in common with used-car salesmen than we would like to think .... :-(

gleaner:

Thanks a lot, Dr Linacre, for your insight into Rasch and statistics!

Frankly, I learn from the forum you kindly create and maintain, not only the beauty of Rasch but also that of statistics.

Thanks a lot, Dr Linacre!

Jessica December 19th, 2013, 1:25pm: Hi, everyone! I met a problem in analyzing my data. I found some misfitting persons in my data, I want to remove them first in order to decrease their influence in measure calibration before reinstating them. I wonder whether there is any criteria for deleting these persons? And should the criteria change with different sample sizes (for example, with a size of over 500, should the criteria be also less strict)? Which fit statistics should we use? Outfit Mnsq or its ZSTD? In some papers, I found some people used infit Mnsq 1.5, while others applied the same criteria (like 0.7 to 1.3) to person fit. Besides the experimental and painstaking way of removing misfitting persons one by one to compare the change in item measure hierarchy, are there any rules telling you when you can stop removing them? (like when all or almost all of the items have their outfit values within their reasonable range, then you can stop?)

gleaner:

Some lesson from Dear Dr Linacre! It is illuminating for me. I hope that it is too for you as well.

https://www.rasch.org/rmt/rmt234h.htm

Jessica: Thanks. Gleaner. That's very helpful. :)

Jessica December 19th, 2013, 2:19pm:

Hi, everyone, I have another question regarding setting critical item fit values for large sample size.

In Bond & Fox (2007), they mentioned the dilemma of using either fit Mnsq or their ZSTD with large sample size. In Liu (2010), he mentioned a study by Smith, Schumacker and Bush (1998) finding that when sample size was over 500, corrections to commonly used MNSQ fit statistics criteria (e.g, MNSQ to be within 0.7 to 1.3) should be made. I found the paper by Smith, Schumacker and Bush (1998), and in the end they mentioned that Wright (1996) suggests for a sample with a size over 500, the critical item infit Mnsq should be 1 + 2/Sqrt (x) and item outfit Mnsq should be 1 + 6/Sqrt (x), where x = the sample size. Should I apply this criteria in my study with a sample of around 540?

I found this criteria really strict afer applying it in my data: more more items are identified as misfit than applying the more commonly used fit range (0.7 to 1.3). But if I use the more lenient criteria, I am worried that some misfitting items couldn't be identified. McNamara (1996: p. 181) says the item fit range should be the mean fit value plus and minus twice the mean square statistic. I just couldn't decide which criteria I should use in my data which are all multiple choice questions. Can anybody help me?

Mike.Linacre:

Thank you for your questions, Jessica.

There are several aspects to what you have written.

1) Is it essential to your work to have data that fit the Rasch model perfectly?

The answer is "Generally not." As philosopher-of-science Larry Laudan writes: "Empirical problems are frequently solved because, for problem solving purposes, we do not require an exact, but only an approximate, resemblance between theoretical results and experimental ones."

2) How much misfit is acceptable? For this, we can use the "window-cleaning" or "onion-peeling" analogy. We can always get a cleaner window by cleaning it again, but we usually stop when the window is clean enough. So see, "When to stop removing ..." https://www.rasch.org/rmt/rmt234g.htm

3) The relationship between mean-squares and ZSTD or t-statistics is well understood. For practical purposes, significance tests lose their value for sample sizes of over 300. See the graph at https://www.rasch.org/rmt/rmt171n.htm

4) General guidance about mean-squares see "Reasonable Mean-Square fit values" - https://www.rasch.org/rmt/rmt83b.htm

Hope these help you, Jessica.

Jessica:

Dear Dr. Linacre,

Thank you so much for your help! Your suggestion is very enlightening and helpful! Now I can move on with my work with more confidence. :).

But I still have another question: if most of us are not concerned about perfect data-model fit (I guess), what is the value of reporting the item/person fit ZSTD (I saw some authors give fit ZSTD, but do not discuss them)? Since the conventional infit/outfit MNSQ provide sufficient information for us to identify the misfitting items, should we just ignore their fit ZSTDs?

Speaking of this, I can't help raising another question about item outfit. I saw some researchers only reported item infit MNSQ as criteria for removing misfitting items while ignoring their outfit MNSQ. And you also mentioned bigger item outfit is a less threat to the validity of the test and can be remedied. If I have to report each item and person measure accurately (as I still want to study the predictive validity of the test), does that mean I need to take away some misfitting people first and then reinstate them after I anchor the good values to the whole item/person sample (as you suggested)?

Mike.Linacre:

Jessica, please think about your audience and the reviewers of your work.

If your audience are familiar with conventional statistical analysis, then they will expect to see significance tests. However, there seems to be no point in reporting those tests (ZSTDs) if there is no discussion of them.

Infit and outfit fit statistics are unlikely to influence the predictive validity of a test. The usual indicator of predictive validity is the correlation between the person measures and an external (demographic?) variable. For instance, a grade-school arithmetic test is expected to have a high correlation between the ability measures of the students and their grade-levels.

We are usually more concerned about infit and outfit statistics when we are evaluating the construct validity of a test. Is it measuring what it is supposed to measure? For instance, on an arithmetic test, we expect the easiest items to be addition, then more difficult is subtraction, more difficult again is multiplication, and most difficult is division. If the arithmetic items do not follow that overall pattern, then the construct validity of the test is in doubt. For instance, if we notice a geography item among the multiplication items, then it does not matter how well it fits statistically, it should not be part of an arithmetic test.

Infit or outfit? If your audience is familiar with conventional statistics, then outfit is preferable because it is a converntional chi-square statistic divided by its d.f., Infit is an information-weighted chi-square (not used in conventional statistical analysis) and Infit is much more difficult to explain to an audience to whom Rasch analysis is new.

Misfitting persons: unless there is a huge proportion of hugely misfitting persons (such as an extremely misbehaving cohort guessing wildly on a multiple-choice test), the misfitting persons will have no effect on the findings you make about your test. They are like smudges on a window-pane. They have no real effect on the view though the window. However, if in doubt, analyze the data both ways. If the findings are substantively different, then that is a finding itself.

Here is a thought: in what way can you explain your own findings most clearly to yourself? Then that is the way to explain your findings to your audience.

Jessica:

Dear Dr. Linacre,

Thanks a lot for your prompt reply. Your explanation makes things much clearer to me. Your help is greatly appreciated. :)

oosta December 19th, 2013, 9:19pm: When I anchor some of my items, Winsteps says that I have two subsets. Table 13.1 (Item statistics) displays "Subset 1" next to each item. However, there are subsets only when I anchor some items. The two analyses and data are identical except for the presence/absence of the IAFILE statement.

oosta: Clarification: "Subset 1" is displayed next to each unanchored item. Nothing (related to subsets) is displayed next to the anchored items.

Mike.Linacre:

My apologies, Oosta. This is a known bug in Winsteps 3.80.1

Please email mike\~/winsteps.com to obtain a pre-release version of winsteps 3.80.2 which has this bug squashed.

Johnena December 19th, 2013, 3:05am:

dear all.

I am a new member here, thank you for holding such a warmful place.

I am still a student, and I am doing the research on child language acquisition test. the puzzle i had met is how to group the subjects into different age group? for example,3 years old, 4-years old and 5 years old.Meanwhile, the difference between the groups should also be significant, i like winsteps very much but i am still in the progress of studying the winsteps, I want to use winsteps to do this group-division, but i don't know how.

so ,could you kindly give me some suggestions? :)

sincerely

Johnena

Mike.Linacre:

Glad you can join us, Johnena.

1. Put an age code into the person label. For instance, age in years, or age in 6-month increments.

2. Run a Winsteps analysis.

3. If you have a smallish sample of students, then output a person map displaying the age code for each student: Winsteps Table 16, suing PMAP= to identify the age-code columns in the person label. If there is an age-related pattern, you should be able to see it.

4. Output sub-totals by person group using the age code. Winsteps Table 28.

Table 28.1 - https://www.winsteps.com/winman/index.htm?table28_1.htm - will tell you if there is a significant difference between pairs of age-codes.

acdwyer77 December 18th, 2013, 6:03pm:

I just analyzed an exam with a bunch of dichotomously scored MC questions using the Rasch model. I would have expected the PTMEAS and DISCRM columns in the item output to be highly correlated. The correlation I am finding between those columns is around .65, but I would have expected it to be 0.9 or higher. The correlation between MEASURE and classical item difficulty, for example, is much higher.

First, I would like to confirm my understanding that PTMEAS is the classical point biserial correlation for the item and that DISCRM is the IRT discrimination parameter if the 2PL model had been implemented. Is that correct?

If my understanding of those two statistics is correct, do you have a quick answer as to why this correlation is low (or a good reference)? Is it because discrimination estimates require larger sample sizes in order to be stable? The examinee sample size for these items ranges from 600 to 1100, fyi.

Thanks!

Mike.Linacre:

acdwyer77, PTMEAS is the point-measure correlation. The classical correlation is the point-biserial. There are two versions in Winsteps:

PTBIS=Y - the point-biserial excluding the correlated observation from the total score

PTBIS=Y - the point-biserial including the correlated observation in the total score

The point-biserial is highly influenced by outlying high and low observations.

DISCRIM is an approximation to the 2-PL IRT discrimination parameter. In Winsteps, it excludes outlying high and low observations. These contribute to the upper and lower asymptotes.

PTMEAS and DISCRIM are expected to be more highly correlated when the outlying observations are trimmed using CUTLO= and CUTHI=

Student123 December 6th, 2013, 1:23pm:

I read this report: http://digibug.ugr.es/bitstream/10481/24229/1/Padilla_Benitez_Hidalgo_Sireci_NERA2011.pdf.

They used cognitive interviews with the following questions to follow up their DIF-analysis:

Tell me what “broad science topics” etc. are for you in school.

When you responded to the first statement which said “I generally have fun when I

am learning broad science topics”, what situations were you thinking of? (places, times, etc.)

Also, statement b) says “I like reading about broad science”. What situations were

you thinking of when responding?

Tell me examples of “broad science problems” you have thought about when

responding to the statement “I am happy doing broad science problems.”

In the phrase "I am interested in learning about broad science ", you have answered

_______ (See and read the alternative marked by the participant in statement e), explain your answer, why did you answer that.

What have you understood for “I am interested”, in the sentence “I am interested in learning about broad science”?

Your reply has been ____ (See and read again the alternative marked in statement

e) In this sentence, what would your "interest in learning about broad science" be? (what would you do, think, etc..) so that your answer would be ____ (read the alternative furthest from that marked by the participant).

A significant ANOVA tells very little about the respondents thoughts about the items or what goes through their minds when they answer a certain instrument. Shouldn't every Rasch study include qualitative interviews (when resources allow it)? Is there any standard or routine procedure for how these interviews might be conducted in Rasch studies?

Mike.Linacre: Student123, yes, the situation you describe applies to all methods of quantitative statistical analysis. For instance, in the USA there is an on-going argument about the meaning of the unemployment percentages. We always need good qualitative information in order to understand what the numbers are telling us. There is no standard or routine procedure. There probably never will be a standard procedure, but hopefully combining quantitative and qualitative methodologies will one day become routine.

Student123:

Debate.

My impression of the general debate in Sweden is that statistics about unemployment and sick leave benefits are seen as inaccurate.

Some argue that people are excluded by being caught in welfare systems and that there is a need to give people opportunity to participate in the labor market again. Therefore the percentages about sick leave benefits does not say much about the average health in society.

Others argue that many who are ill does not get the sick leave benefits they should, insted they are included in employment agency programs along with the unemployed. Programs which in the statistics are recorded as "new job-opportunities". The employment percentages are then seen as misleading.

The problem seems to be that populations "unemployed", "new job-opportunities", sicklisted etc. are poorly defined based on political standpoints.

Interviewing.

"The cognitive interviewing approach to evaluating sources of response error in survey questionnaires was developed during the 1980's through an interdisciplinary effort by survey methodologists and psychologists." (Willis, 1994. p. 1).

"The cognitive approach to the design of questionnaires has generated a body of methodological research... Several Federal statistical agencies, as well as some private survey research organizations, including Research Triangle Institute (RTI), now routinely carry out cognitive interviewing activities on a wide variety of survey questionnaires." (ibid.).

mdilbone December 16th, 2013, 8:41pm:

Hi,

I need some advice with item fit interpretation for polytomous model.

The mean-square statistics for items are pretty good. Only one item has a fit over two and the others fall between .7-1.7. However, for some of these same items the z-standard fit stats are outrageously high and low. Ranging from 9 to -5.

What is this telling me?

I have not seen many authors report z-standard fit stats, only the mean-squares. What is the reason for this?

Many thanks!

Megan

Mike.Linacre:

Thank you for your questions, Megan.

Those numbers are telling us there is a large sample size. From the graph at https://www.winsteps.com/winman/index.htm?diagnosingmisfit.htm (a larger version of https://www.rasch.org/rmt/rmt171n.htm ) the sample size appears to be around 600.

Conventional statisticians, who only see numbers and not their meanings, have no idea what the size of a number (such as a mean-square) indicates. They can only answer the question "Is this number due to chance or not?" - i.e., a significance test.

Rasch analysis is somewhat different. We know the meanings of our numbers. So the question for us is "Is this number substantively important or not?"

We can apply the same logic to cleaning windows. Significance test: "Is this window clean or not?" Mean-square: "How dirty is this window? Is it dirty enough that we need to take action?" For most of us, a smudge or two on a window, so that the window is not "clean", is not enough to provoke us to take action.

suguess December 13th, 2013, 9:18am:

Dear Prof. Linacre,

Good morning! I have some questions about the FACETS specifications error F1 and I hope you could lend me a hand, thanks a lot!

What I want to specify is the task difficulty, and the data is 187 essays of 2 different prompts rated by 10 raters separately, i.e. 1 rater will rate almost 20 essays. But the software runs into error F1, and I have checked the user mannual but find no way out. Attached is my data specification. Your help is greatly appreciated.

Best regards,

Youshine

Mike.Linacre:

Thank you for your question, Youshine.

Look at this:

001,1,1,7.22

The rating is "7.22".

Ratings must be integers on a rating scale.

Where does the number 7.22 come from?

suguess:

Thanks for your prompt reply.

Actually the original rating scale allows decimals because the score of every essay is a weighted mean from three parts.

Does it mean that the software cannot analyze the data because of the decimal rating?

Thanks!

Mike.Linacre:

Youshine, Facets expects the ratings of the parts (integers) and the weights of the parts. Are those available?

If not, do you know what the weights of the parts were? We may be able to reconstruct the original ratings of the parts from the decimal ratings.

suguess:

Thanks for your help.

The original ratings and weighting of each part are available, but there are still such scores with demimals as 6.5 or 7.5. Will we be able to reconstruct the data?

Besides, I did not ask raters to do some multiple rating of each easay, so the data might not be good for Facets?

Mike.Linacre:

Youshine, 6.5 and 7.5 are no problem. We double the ratings (13, 15) and halve the weighting. Yes, we should be able to reconstruct the data usefully.

The design may not be the best for Facets, but Facets should provide valuable information.

suguess:

Thanks a lot for your help.

Then I will sort out the original data and ask for your advice later.

:)

bfarah December 14th, 2013, 3:02am:

Sorry Prof. Linacre,

I could not attach both files in 1 post, so here is the runfile

Thank you for your assistance

Farah Bahrouni

Mike.Linacre:

Thank you for your posts, Farah.

1. Facet 1 (examinees) is anchored, so it is constrained.

Only Facets 3 (L1) is not anchored, so please specify it as

Non-centered = 3

When this is done, the estimation converges after about 50 iterations.

2. Reliabilities. These have little meaning for anchored elements, because the anchor values are not obtained from these data. Notice the large "Displacements".

3. Orientation of facets. Please be sure that the elements in the facets are oriented in the direction you intend:

Positive = ability, easiness, leniency

Negative = inability, difficulty, severity

4. Please be sure that the anchor values for the elements are oriented in the same direction as the facet.

5. Separation and strata: see www.rasch.org/rmt/rmt163f.htm

bfarah:

Thank you for your prompt reply. I'll try your suggestions and let you know.

God bless you

Farah

bfarah:

Dear Prof. Linacre,

Thank you so much for your assistance.

Following your instructions, I have solved the iteration and the 'Warning 7' problems. On the other hand, I'm still struggling with table 7.3.1 (the L1 report), where the severe appears to be lenient and vice versa. You suggested to orient the anchored facets the same way as they are in the file commands, so I copied and pasted the anchored values, but it did not help. The orientation I have is not different from what is in Facets manual.

Could you please show me how to do it. Please refer to the attached files in my earlier posts entitled 'Concerns'.

I appreciate your help

Thank you

Farah Bahrouni

Mike.Linacre:

Farah, if you want L1 oriented as "Ability", then

Positive = 1,3 ; Examinee and L1: higher score -> higher measure

bfarah:

Thank you so much.

The attached table shows what I got when I set facets 1, 3 to positive: it has just reversed the order the facets are displayed in the columns, but the values remained unchanged, so the problem has not been solved. Please advise

Thank you

Farah

Mike.Linacre:

Farah, this analysis is difficult to interpret because:

1) there are anchored elements

2) each L1 is specified to define its own rating scale

3) the data are not completely crossed

If we remove the anchoring, except for group-anchoring the 8 subsets of raters at 0, and have a common rating scale, then we see:

Anchored (Original) Unanchored

+---------------------------------------------------------------+-----------------

| Total Total Obsvd Fair(M)| | |Fair(M)| |

| Score Count Average Average|Measure | N L1 |Average|Measure |

|--------------------------------+--------+---------------------|-------+--------+

| 1145 240 4.77 5.00 | -0.49 | 3 Indian | 4.87 | -1.10 |

| 1188 240 4.95 4.78 | -0.98 | 1 Native | 4.95 | -1.30 |

| 1224 240 5.10 5.00 | -0.89 | 2 Arab | 5.10 | -1.64 |

| 499 96 5.20 5.40 | -0.95 | 4 Russian | 5.09 | -1.60 |

|--------------------------------+--------+---------------------|-------+--------+

Notice that in the unanchored analysis, the Fair Averages are close to the Observed Averages

bfarah December 14th, 2013, 2:59am:

Dear Professor Linacre,

I am investigating the effects of rater L1 and experiential backgrounds on their writing assessment behavior. Experience is restricted to teaching the course the participating students are taking, as ESL/EFL teaching experience is a prerequisite criterion for being recruited at this place, so everybody is well experienced in that respect, therefore, taking it as a variable would be redundant.

For this, I had 68 raters from 4 different L1 backgrounds (20 Natives, 20 Arabs, 20 Indians, and 8 Russians) score analytically 3 essays written by 3 Omani (Arab) students on 4 categories: Task Achievement, Coherence & Cohesion, Vocabulary, and Grammar Accuracy using a 7-point scale for each category. I used FACETS to analyze the data for 5 facets: Examinees, Raters, L1, Experience, and Category. I attach herewith the Run file and the Output file. Could please look at them, check if there are errors in the Run file, and help me with the following questions, thanking you in advance for your assistance:

1. First, when I run the program, it does not not converge. It keeps on going until I press the stop button

2. I get this message at the top of Table 3: Warning (7)! Over-constrained? Noncenter= not in effect. I have non-centered facet 1, but it did help. I'm still receiving the same message.

3. Examinee Measurement Report: Is Reliability = 1 OK there?

4. Looking at Table 7.2.1 (Raters Measurement Report), I am concerned about the S.E. values. Don't you think they are rather high? If so, what is this due to? Do they affect the accuracy of the generated measures? I am concerned because I do not know how high S.E. should be to cause a serious threat to the estimation precision.

5. Moving to Table 7.3.1 (L1 Measurement Report): When we look at the measures we find that the Native group is the most severe, followed by the Indians, then come the Arabs, while the Russians are the most lenient . The most severe group should have the least total score (column 1) and their observed average (column 3) should be less than the expected average (column 4). It seems to me that the Native group and the Indian group are reversed. I also notice that the Observed Average for the Russians, the most lenient group, is less than the Fair Average, which to my understanding means they are severe, not lenient. I could not understand what is going on there. Please advise

6. Table 7.4.1 (Experience Measurement Report): My concern here is related to the Reliability and the Separation indices: Reliability = 0, Separation index = 0. Is this normal? Can this happen? Otherwise, please advise on what is wrong, and how to rectify that.

7. In the case of Experience, what does Reliability refer to? I mean does it refer to the measures generated by FACETS analysis, or to the ratings of the Experienced and Novice rater groups? In case the answer is the 2nd, how can we explain the fact that all facets (same raters, same ratings) are reliable except for Experience?

8. What is the difference between Separation index and Strata index? How is the latter interpreted?

I apologize for this rather long message; I just wanted to make myself as clear as possible

Thank you for your assistance

Best regards

Farah Bahrouni

Elina December 13th, 2013, 8:37am:

Hello Mike

I've got two questions this time. This is a very small data: 21 items and 93 persons.

Q1: I got a warning "Data are ambiguously connected into 2 subsets.". In the subset 2 there's only one person (the only one having maximum score). Do I have a problem here?

Q2: Maximum score is 22, because of one partial credit item. However, in table 20.2 there are scores up to 42. Row 22 seems to be almost fine (CUM. FREQ. 93 and 100.0 but PERCENTILE 99). In the rows 23-42 measure still grows, S.E. varies, FREQ. is 0, CUM. FREQ. is 93 and 100.0 and PERCENTILE is finally 100. Oh why?

Elina

Mike.Linacre:

Elina:

Q1 & Q2: The problem is one partial-credit item, but the "Rating Scale" Model is specified.

In Winsteps, please be sure that ISGROUPS= is active (no ; ) and is correctly specified, something like:

ISGROUPS=DDDDDDDDDDDDDDDDDDDDP

In Winsteps output, there must be Table 3.2 and Table 3.3

Elina:

Thank you, problems disappeared. I had tried to use GROUPS = 0.

Elina

Mike.Linacre:

Elina,

GROUPS = 0 also works, but it was probably

; GROUPS = 0 which is a comment

; means "ignore whatever follows"

Elina: So embarrassed... I seem to have forgotten ; this time. Thanks!

aureliel December 12th, 2013, 1:48pm:

Dear all,

In our data, we think people from two different groups use the rating scale differently (category probability curves look differently for both groups). I would like to test whether these curves are statistically/significantly/truly different for both groups or not (a differential category functioning analysis instead of a dif analysis). Would anyone whether this is possible?

Kind regards,

Aurelie

Mike.Linacre: Aurelie, you could try a chi-squared test of a 2(groups)x(categories) cross-tabulation.

aureliel:

Dear Mike,

thank you for your reply. Yes, I have done a chi-square and category frequencies do differ between groups.

However, I thought that using Rasch analyses you could say more nuanced things about a rating scale, such as about the ordering and the 'difficulty' (treshold). Could you compare those aspects across groups as well?

Thanks for your advice!

Kind regards,

Aurelie

Mike.Linacre:

Aurelie, we can certainly make some descriptive comparisons based on thresholds. Usually we can make the same comparisons by looking at the category frequencies. We like to see a smooth distribution of frequencies. Sharp peaks or troughs in the frequency distribution can be problematic.

A "non-uniform DIF analysis" is an alternative to "differential category function". This can be seen by plotting the empirical ICCs for the item both groups.

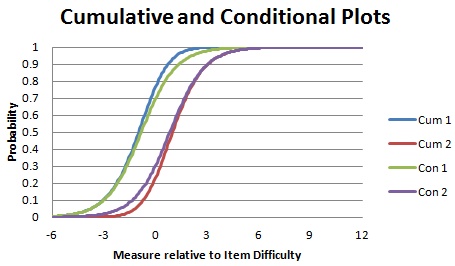

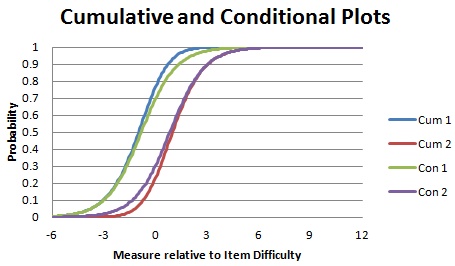

uve December 9th, 2013, 11:13pm:

Mike,

As I understand it, the conditional curves treat the categories as dichotomies, so the theta location where there is a 50/50 chance of being observed in that category or not.

As I understand it, the cumulative curves are the point of being observed in a category or any others below versus the category above.

However, whenever I switch between them, they both point to the same location on the scale. I thought perhaps there was something about my own data that was off and so used the Liking For Science data and it too did not change.

Perhaps I am not fully understanding the difference, but it would seem to me that these two different analyses should be giving very different information about the scale.

Mike.Linacre: Uve, please help me see the situation. In the analysis of Example0.txt, what changes to the control file have you made? What output Tables or Graphs are you looking at?

uve: I have made no changes to the control file. I just used Example0 as is. I then simply selected the Cumulative and Conditional options in the graph. Switching back and forth between them yields virtually no difference. I've done this with several other datasets and I always seem to get the same thing. I would rather use actual data for comparison, but the conditional probabilities are not included in the ISFILE output.

Mike.Linacre:

Uve, are we looking at the same Graphs? I flipped the Cumulative Graph, and then plotted it together with the Conditional Graph using Excel. Notice that the conditional curves are parallel dichotomous logistic ogives.

uve:

Thanks Mike. Yes, I was looking at the same thing. However, I was focusing only on the .5 probability point and did not notice any significant shift on the theta scale regardless of which datasets, including example0, I was using. Now that you have flipped the cumulative graphs, I see the difference and it seems to be primarily confined to points above and below the lines.

So if I am reading this correctly, from the conditional standpoint at roughly about zero on the scale, there is about an 80% probability of being observed at Neutral versus 20% not (Dislike).

At that same ability point from the cumulative perspective there is roughly a 90% of being observed at Dislike versus 10% in any category above.

Would that be close?

Mike.Linacre: Your eyesight is probably better than mine, Uve, but we can see the same thing by looking at the Category Probability Curves. "Conditional" compares adjacent curves. "Cumulative" compares all the curves, split high-low.

uve:

This is interesting and something I had not noticed before. It has always been my understanding that the Andrich Thresholds represented by where the adjacent category curves intersect mean equal probability, but that does not have to mean 50/50. It could be 30/30 or 60/60, etc. I think I have that right.

However, it appears that the conditional curves "convert" these points to 50/50. At least that's what my datasets seem to suggest. So I guess I'm a bit confused as to what is meant by "conditional".

Also, how would I interpret, say, .75 on the conditional curve?

Mike.Linacre:

Uve, "Conditional" means "conditional on looking only at the two adjacent categories". So .75 means: "the higher category is 3 times as probable as the lower category of the pair of categories".

uve: So would it be safe to say these are not similar to the Threshold Characteristic Curves found in RUMM?

Mike.Linacre:

Uve, please provide a link to an image of "Threshold Characteristic Curves".

The most widely published polytomous curves are the "Category Probability Curves" in which the Andrich Thresholds are at the intersections of the probability curves of adjacent categories. These Andrich Thresholds are the locations where the conditional curves cross the .5 probability line.

uve:

Virtually all of the TCC's I've seen have been in print. "Distractors with Information in Multiple Choice Items: A Rationale Based on the Rasch Model", Andrich & Styles, Criterion Referenced Testing, 2009 JAM Press is one example.

Mike.Linacre:

Thank you for the reference, Uve.

In the reference, these seem to be equivalent:

Fig. 1: Theoretical ICC = (Winsteps) Model ICC

(matching) Observed proportions = (Winsteps) Empirical ICC

Fig. 3: Category characteristic curves CCC = (Winsteps) Category probability curves

Fig. 5: Latent ICC = (Winsteps) Conditional ICC

(matching) Observed proportions = (Winsteps) not reported

Threshold probability curve = (Winsteps) Conditional ICC

Fig. 7a: Distractor proportions = (Winsteps) Empirical option curves

Fig. 7d: Threshold characteristic curve TCC = (Winsteps) Conditional ICC

Fig. 12: Person-item threshold distribution = (Winsteps) Table 1.7 Item Map with Andrich Thresholds

uve: Thanks for verifying!

ffwang December 7th, 2013, 10:41am:

Hi Mike,

I am using Facets to calibrate item parameters and ability scores. I learned in my class that we can code missing data in several ways, in my case, I used missing=999. As the test design I have is common item design with unique items on each form (2 forms I have), so part of the items on form 1 is not reached by examinees who take form 2, vice versa. So my question is, in Facets, how should I distinguish the coding of two different missing: one is missing in real sense, the other is not reached actually. Do I have to consider scoring them differently in terms of item or theta calibrations as I read somewhere in Winstep?

Thanks, Fan

Mike.Linacre:

Fan, you can use two different data codes in Facets: any values that are not valid responses. Missing (=skipped) and "Not reached" are coded differently when they are to be analyzed differently. For instance, on most multiple-choice tests, "skipped" are scored as "wrong".

The scoring of "not reached" depends on the purpose of the analysis. If we want good estimates of item difficulty, then "not reached" is treated as "not administered". If "not reached" is scored as "wrong", the the latter items on the test are estimated to be more difficult than they really are.

So, a good approach for speed tests is:

Analysis 1: "Not reached" are analyzed as not administered. Item difficulties are estimated.

Analysis 2: "Not reached" are analyzed as wrong. Items are anchored at their difficulties from analysis 1. Person abilities are estimated.

ffwang:

Hi Mike, thank you very much for the detailed explanation.

I wrote the following two codes to run the two separate analysis in order to get accurate item difficulty estimates and ability estimates. I have two types of missing data treating differently in terms of scoring. One is missing/skipped, scored as 0; the other one is missing/not administered, I guess those items will be scored as -1. First I used the following code to estimate item parameter, but it has been running the whole afternoon and is still running but didn't converge (not sure if this is the right word in this case). Can you help me take a look at my code to see if I did anything wrong? Thanks.

My codes for estimating item difficulty:

; item parameter calibrations

CODES=01S

NEWSCORE=010 ; S, skipped, is scored 0

MISSING-SCORED= -1 ; data code R is not in CODES= so it will be scored -1 = "ignore", "not administered"

IFILE=item-calibrations.txt

Output = SKTOUT1.txt

Title = AP Biology scientific knowledge for teaching Pilot items ;

Arrange = N

Facets = 2 ; four facets: examinees and items

Positive = 1 ; for facet 1, examinees, higher score = higher measure

Noncenter = 1 ; only facet 1, examinees, does not have mean measure set to zero

Pt-biserial = Yes ; report the point-biserial correlation

Vertical=1*, 2A ; show examinee by distribution, items by number and name

Model = ?,?,D ; elements of the two facets interact to produce dichotomous responses

Labels =

1,Examinees ; examinees are facet 1

1-196

*

2,items

1-63

*

Data=

1,1-63,0,1,1,1,1,1,1,0,1,1,0,1,0,1,0,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,0,0,1,0,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

2,1-63,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,1,1,1,1,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

3,1-63,0,0,1,1,0,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,1,1,1,1,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

4,1-63,0,1,1,0,0,0,1,1,1,1,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,1,1,0,0,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

5,1-63,0,1,0,0,0,0,0,0,0,0,1,0,0,1,0,1,1,1,1,1,1,1,1,1,1,0,0,1,0,0,0,1,1,1,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

6,1-63,0,0,1,0,1,1,1,0,1,1,1,1,0,0,0,1,1,1,1,1,1,1,1,1,1,0,1,1,0,0,0,0,0,0,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

7,1-63,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,1,1,1,0,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

8,1-63,0,0,1,1,0,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,1,0,0,1,1,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

9,1-63,0,1,0,1,1,1,1,0,0,1,0,1,0,1,0,0,1,1,1,1,1,1,1,1,1,1,1,0,0,0,0,0,0,0,0,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

10,1-63,1,0,1,1,0,0,1,0,1,1,0,1,1,1,0,0,1,1,1,1,1,1,1,1,1,1,0,0,0,1,0,0,0,0,0,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

11,1-63,0,0,1,1,1,0,1,0,1,1,1,1,1,1,0,1,1,1,1,1,1,1,1,1,0,1,1,0,0,1,1,1,0,1,0,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

12,1-63,0,0,1,0,0,0,1,1,0,1,0,1,0,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,1,1,1,1,1,1,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

13,1-63,1,1,1,1,1,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,0,0,1,1,0,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

14,1-63,0,1,1,0,1,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,0,0,1,1,0,1,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

..............................................

So for estimating ability, my code is as follows. But I didn't get a chance to run it because I do not have the accurate item difficulty parameter estimates.

; ability parameter calibrations

IAFILE=item-calibrations.txt

CODES=01SR

NEWSCORE=0100 ; S and R are scored 0

Output = SKTOUT2.txt

Title = AP Biology scientific knowledge for teaching Pilot items ;

Arrange = N

Facets = 2 ; four facets: examinees and items

Positive = 1 ; for facet 1, examinees, higher score = higher measure

Noncenter = 1 ; only facet 1, examinees, does not have mean measure set to zero

Pt-biserial = Yes ; report the point-biserial correlation

Vertical=1*, 2A ; show examinee by distribution, items by number and name

Model = ?,?,D ; elements of the two facets interact to produce dichotomous responses

Labels =

1,Examinees ; examinees are facet 1

1-196

*

2,items

1-63

*

Data=

1,1-63,0,1,1,1,1,1,1,0,1,1,0,1,0,1,0,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,0,0,1,0,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

2,1-63,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,1,1,1,1,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

3,1-63,0,0,1,1,0,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,1,1,1,1,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

4,1-63,0,1,1,0,0,0,1,1,1,1,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,1,1,0,0,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

5,1-63,0,1,0,0,0,0,0,0,0,0,1,0,0,1,0,1,1,1,1,1,1,1,1,1,1,0,0,1,0,0,0,1,1,1,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

6,1-63,0,0,1,0,1,1,1,0,1,1,1,1,0,0,0,1,1,1,1,1,1,1,1,1,1,0,1,1,0,0,0,0,0,0,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

7,1-63,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,1,1,1,0,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

8,1-63,0,0,1,1,0,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,1,0,0,1,1,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

9,1-63,0,1,0,1,1,1,1,0,0,1,0,1,0,1,0,0,1,1,1,1,1,1,1,1,1,1,1,0,0,0,0,0,0,0,0,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

10,1-63,1,0,1,1,0,0,1,0,1,1,0,1,1,1,0,0,1,1,1,1,1,1,1,1,1,1,0,0,0,1,0,0,0,0,0,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

11,1-63,0,0,1,1,1,0,1,0,1,1,1,1,1,1,0,1,1,1,1,1,1,1,1,1,0,1,1,0,0,1,1,1,0,1,0,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

12,1-63,0,0,1,0,0,0,1,1,0,1,0,1,0,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,1,1,1,1,1,1,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

13,1-63,1,1,1,1,1,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,0,0,1,1,0,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

14,1-63,0,1,1,0,1,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,0,0,1,1,0,1,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

15,1-63,0,0,0,0,0,0,1,0,0,1,1,1,0,1,1,0,1,1,1,1,1,1,1,1,1,0,0,1,0,1,0,0,0,1,0,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

16,1-63,0,1,1,1,0,0,1,0,1,1,0,1,1,1,0,1,1,1,1,1,1,1,1,1,1,0,1,1,1,1,1,0,0,0,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

.................................

Thank you very much. Fan

Mike.Linacre:

Fan, these are Winsteps instructions:

CODES=01S

NEWSCORE=010 ; S, skipped, is scored 0

MISSING-SCORED= -1 ; data code R is not in CODES= so it will be scored -1 = "ignore", "not administered"

and

IAFILE=item-calibrations.txt

CODES=01SR

NEWSCORE=0100 ; S and R are scored 0

For Facets,

response scoring: use Rating scale=

anchoring: use Labels= and ,A for the anchored facet.

ffwang:

Hi Mike,

Thank you for pointing this out. I used Winstep codes only because I couldn't find out how to code missing data in Facets manuals.

I think I am still confused about the right codes to use and I tried but found my codes might be problematic. Could you help me take a look? Thank you.

Analysis 1: estimating item parameters

; item parameter calibrations

Output = SKTOUT1.txt

Title = AP Biology scientific knowledge for teaching Pilot items ;

;Arrange = A

Facets = 2 ; two facets: examinees and items

Positive = 1 ; for facet 1, examinees, higher score = higher measure

Noncenter = 1 ; only facet 1, examinees, does not have mean measure set to zero

Pt-biserial = Yes ; report the point-biserial correlation

Vertical=1*,1A, 2A ; show examinee by distribution, label,items by label

Model = ?,?,D ; elements of the two facets interact to produce dichotomous responses

Rating scale=recoded, D ; a dichotomous scale called "recoded"

0=wrong,,,S ;S recoded to 0

-1=missing,,,R ; R recoded to be ignored

*

Labels =

1,Examinees ; examinees are facet 1

1-196

*

2,items

1-63

*

Data=

..............................................

Analysis 2: estimating ability parameter

; ability parameter calibrations

Output = SKTOUT2.txt

Title = AP Biology scientific knowledge for teaching Pilot items ;

;Arrange = A

Facets = 2 ; two facets: examinees and items

Positive = 1 ; for facet 1, examinees, higher score = higher measure

Noncenter = 1 ; only facet 1, examinees, does not have mean measure set to zero

Pt-biserial = Yes ; report the point-biserial correlation

Vertical=1*,1A, 2A ; show examinee by distribution, label,items by label

Model = ?,?,D ; elements of the two facets interact to produce dichotomous responses

Rating scale=recoded, D ; a dichotomous scale called "recoded"

0=wrong,,,S+R ;SR recoded to 0

;-1=missing,,,R ; R recoded to be ignored

*

Labels =

1,Examinees ; examinees are facet 1

1-196

*

2=items,A ; anchored facet

1-63

*

Data=

.......................................

Three problems:

1. I used "Rating scale=recoded, D", but the output gave me a warning "Rating (or other) scale = RECODED,D,General,Ordinal Warning (3)! This Rating (or partial credit) scale= is NOT referenced by a Model= specification" And it makes sense to me because I remembered I only use "rating scale=" for rating scale or partial credit model in Facets. So I guess I must did something wrong.

2. As you might or might not notice, I recoded not administered items differently in the two analyses, but the output are identical, which doesn't seem to be right to me.

3. For anchoring, I never used it before and I anchored items the way I consulted in the manual, is that right? As I only made two changes to the codes for ability estimates, one for recoding "not administered" as wrong, 0, the other is anchoring "item parameter estimates", so maybe I need a input file for the item parameters I got from previous run, then how?

Thank a lot. Fan

Mike.Linacre:

You are making progress, Fan.

1. Any code in the data that is not valid for your Model= is a missing data code.

Your model is

Model = ?,?,D

"D" expects the data to be 0 and 1. Anything else such as S or R or 2 is a missing data code.

2. Activate the Rating scale= in the Model= specification:

Model = ?,?,recoded ; elements of the two facets interact to produce dichotomous responses

Rating scale=recoded, D ; a dichotomous scale called "recoded"

0=wrong,,,S ;S recoded to 0

*

3. The second analysis is easier if you write an Anchorfile= from the first analysis, so the first analysis is:

; Analysis 1: estimating item parameters

; item parameter calibrations

Output = SKTOUT1.txt

Anchorfile=SKTOUT1.anc.txt

Title = AP Biology scientific knowledge for teaching Pilot items ;

;Arrange = A

Facets = 2 ; two facets: examinees and items

Positive = 1 ; for facet 1, examinees, higher score = higher measure

Noncenter = 1 ; only facet 1, examinees, does not have mean measure set to zero

Pt-biserial = Yes ; report the point-biserial correlation

Vertical=1*,1A, 2A ; show examinee by distribution, label,items by label

Model = ?,?,recoded ; elements of the two facets interact to produce dichotomous responses

Rating scale=recoded, D ; a dichotomous scale called "recoded"

0=wrong,,,S ;S recoded to 0

*

Labels =

1,Examinees ; examinees are facet 1

1-196

*

2,items

1-63

*

Data=

1,1-63,0,1,1,1,1,1,1,0,1,1,0,1,0,1,0,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,0,0,1,0,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

2,1-63,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,1,1,1,1,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

3,1-63,0,0,1,1,0,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,1,1,1,1,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

4,1-63,0,1,1,0,0,0,1,1,1,1,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,1,1,0,0,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

5,1-63,0,1,0,0,0,0,0,0,0,0,1,0,0,1,0,1,1,1,1,1,1,1,1,1,1,0,0,1,0,0,0,1,1,1,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

6,1-63,0,0,1,0,1,1,1,0,1,1,1,1,0,0,0,1,1,1,1,1,1,1,1,1,1,0,1,1,0,0,0,0,0,0,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

7,1-63,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,1,1,1,0,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

8,1-63,0,0,1,1,0,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,1,0,0,1,1,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

9,1-63,0,1,0,1,1,1,1,0,0,1,0,1,0,1,0,0,1,1,1,1,1,1,1,1,1,1,1,0,0,0,0,0,0,0,0,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

10,1-63,1,0,1,1,0,0,1,0,1,1,0,1,1,1,0,0,1,1,1,1,1,1,1,1,1,1,0,0,0,1,0,0,0,0,0,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

11,1-63,0,0,1,1,1,0,1,0,1,1,1,1,1,1,0,1,1,1,1,1,1,1,1,1,0,1,1,0,0,1,1,1,0,1,0,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

12,1-63,0,0,1,0,0,0,1,1,0,1,0,1,0,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,1,1,1,1,1,1,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

13,1-63,1,1,1,1,1,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,0,0,1,1,0,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

14,1-63,0,1,1,0,1,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,0,0,1,1,0,1,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

15,1-63,0,0,0,0,0,0,1,0,0,1,1,1,0,1,1,0,1,1,1,1,1,1,1,1,1,0,0,1,0,1,0,0,0,1,0,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

16,1-63,0,1,1,1,0,0,1,0,1,1,0,1,1,1,0,1,1,1,1,1,1,1,1,1,1,0,1,1,1,1,1,0,0,0,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

.................................

Mike.Linacre:

Fan, now for the second analysis.

Edit the anchorfile output of the first analysis,

1. Remove the ,A for the Examinees

2. Recode R to wrong

; Analysis 2: estimating Examinee abilities

;Output = SKTOUT1.txt

;Anchorfile=SKTOUT1.anc.txt

Title = AP Biology scientific knowledge for teaching Pilot items ;

Facets = 2 ; two facets: examinees and items

Positive = 1 ; for facet 1, examinees, higher score = higher measure

Noncenter = 1 ; only facet 1, examinees, does not have mean measure set to zero

Pt-biserial = Yes ; report the point-biserial correlation

Vertical=1*,1A, 2A ; show examinee by distribution, label,items by label

Models =

?,?,RS1,1 ; RECODED

*

Rating (or partial credit) scale = RS1,D,G,O ; RECODED

0=wrong,1,A,S+R ; add R here

*

Labels =

1,Examinees ; remove A from here

1=1,.8224688 ; you can leave these values. They are not anchored.

2=2,-4.642145

3=3,1.244645

.....

*

2,items,A

1=1,2.492652 ; these values are anchored

2=2,.55114

3=3,-.8945987

......

*

Data=

1,1-63,0,1,1,1,1,1,1,0,1,1,0,1,0,1,0,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,0,0,1,0,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

2,1-63,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,1,1,1,1,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

3,1-63,0,0,1,1,0,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,1,1,1,1,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

4,1-63,0,1,1,0,0,0,1,1,1,1,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,1,1,0,0,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

5,1-63,0,1,0,0,0,0,0,0,0,0,1,0,0,1,0,1,1,1,1,1,1,1,1,1,1,0,0,1,0,0,0,1,1,1,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

6,1-63,0,0,1,0,1,1,1,0,1,1,1,1,0,0,0,1,1,1,1,1,1,1,1,1,1,0,1,1,0,0,0,0,0,0,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

7,1-63,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,1,1,1,0,S,S,S,S,S,S,S,S,S,S,S,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

8,1-63,0,0,1,1,0,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,1,0,0,1,1,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

9,1-63,0,1,0,1,1,1,1,0,0,1,0,1,0,1,0,0,1,1,1,1,1,1,1,1,1,1,1,0,0,0,0,0,0,0,0,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

10,1-63,1,0,1,1,0,0,1,0,1,1,0,1,1,1,0,0,1,1,1,1,1,1,1,1,1,1,0,0,0,1,0,0,0,0,0,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

11,1-63,0,0,1,1,1,0,1,0,1,1,1,1,1,1,0,1,1,1,1,1,1,1,1,1,0,1,1,0,0,1,1,1,0,1,0,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

12,1-63,0,0,1,0,0,0,1,1,0,1,0,1,0,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,1,1,1,1,1,1,1,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

13,1-63,1,1,1,1,1,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,0,1,0,0,1,0,0,1,1,0,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

14,1-63,0,1,1,0,1,0,1,0,1,1,1,1,0,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,0,0,1,1,0,1,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

15,1-63,0,0,0,0,0,0,1,0,0,1,1,1,0,1,1,0,1,1,1,1,1,1,1,1,1,0,0,1,0,1,0,0,0,1,0,0,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

16,1-63,0,1,1,1,0,0,1,0,1,1,0,1,1,1,0,1,1,1,1,1,1,1,1,1,1,0,1,1,1,1,1,0,0,0,1,1,1,1,1,1,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R,R

............................

ffwang:

Hi Mike,

Thank you very much for the detailed guidance. You are awesome!!!

I think I get the output pretty much, but I have several questions that I do not understand. Sorry for keeping bugging you. :-) This is my first time using Facets on dichotomous items. I used Facets mostly for polytomous/Likert-type items.

1, I found out that for the "not administered" item responses, whether recode it to be "-1" or not, the outputs are the same. So can I make the generalization that if I want to ignore any item response (one way to handle "not reached" item responses), just use any other code that I didn't specify in the syntax command. The logic is that if something is not specified in the syntax, Facets will ignore it. In addition, in the model summary, the code R I used to code "not administered" item response is not included in either of the two response categories (0,1). Did I do anything wrong? Should I add the code "-1=missing,,,R ; R recoded to be ignored" to recode the "not administered" item responses. "

2. I didn't quite get the model specification you recommended, particularly when I examined the output. And there is a warning too in my output:

Analysis 1:

"Check (2)? Invalid datum location: 1,41,R in line 24. Datum "R" is too big or not a positive integer, treated as missing.

Total lines in data file = 197

Total data lines = 196

Responses matched to model: ?,?,RECODED,1 = 12348

Total non-blank responses found = 12348"

Analysis 2:

"Total lines in data file = 197

Total data lines = 196

Responses matched to model: ?,?,RS1,1 = 12348

Total non-blank responses found = 12348"

As my item responses are only 0's and 1's, I do not understand why there are 1,2,3,4,8 because I didn't recode the 0's and 1's to be any number as indicated in the output.

3. for the model for analysis 2,

"Model = ?,?,RS1,1 ; recoded

Rating (or partial credit) scale=RS1, D, G, O ; G means all general, sharing the same scale

0=wrong,1, A,S+R ;SR recoded to 0"

I checked out what "G" means, but why we need the "O" here? I couldn't find it out in the manual.

In addition, why "1" is also coded to be 0?

Does "A" refer to the anchored item parameters? Why we want to recode them to be 0?

4. I checked out the reliability estimates and I expected the reliability estimates should be the same for items for both analysis, but they are different. I understand the reliability estimates as index of separation and I thought the separation indices should be the same in both analysis because they are the same items and with the same test takers.

E.g.,

for 1st analysis, reliability for items = 0.90

for 2nd analysis, reliability for items = 0.83

for 1st analysis, reliability for examinees = 0.80

for 2nd analysis, reliability for examinees = 0.87

5. A side question. To interpret fit, we are mostly worried about InFit/OutFit > 1.2. If all the codes I used are correct, it seems to me most of the items and persons are not fitting well. Are there any other fit statistics that I can use in Facets to help interpret the results? How should I interpret the item discrimination for each item?

Thank you very much!

Mike.Linacre:

Fan, please look through the Facets tutorials at www.winsteps.com/tutorials.htm - also there is a huge amount of material in the Facets Help file and in the books about Facets.

1. All invalid responses such as -1 and R are ignored by Facets. Recoding R into -1 is recoding one invalid response to become another.

2. "Check (2)? Invalid datum location:

Facets alerts you about invalid data values in case they are data-entry errors. Invalid data are ignored.

3. Total non-blank responses found = 12348" = twelve thousand three hundred and forty-eight responses in the data file.

O = Ordinal (the default)

4. Different data analyzed = different reliabilities.

5. 1.2 is an exceedingly tight fit. See "Reasonable Mean-Square Fit Statistics" - https://www.rasch.org/rmt/rmt83b.htm -

For this data design with low control over the data, high mean-squares are expected.

ffwang: You are my life saver and thank you very much, Mike. Your responses are very helpful and the links too. I understand my questions now and laughed at myself for the "12348" because I was all worried about "responses being wrongly recoded but ignored the fact that it's actually about the counts of the responses". You are the best and Facets is the best too!!!

ffwang:

Hi Mike, I think I still have a question.

As you said the two separate analysis are good for speeded tests (or maybe tests that are potentially speeded), I was thinking if my test is not speeded, so I should not run two separate analyses, right?

My test is a common item design: 25 unique items on form A, 20 common items, and 25 unique items on form B, this is why part of the items are not administered to the examinees who took either form A or form B. So I think as long as I coded the items that were not administered correctly, I do not have to run the second analysis to estimate ability estimates, right?

Thank you.

Mike.Linacre:

Correct, Fan. The first analysis is enough for both examinees and items.

ffwang: Thank you very much! I will express my gratitude to you in front of my professor as well. Without your help, I couldn't be able to get my project work done. Thanks again. Best, Fan :-)

ffwang December 7th, 2013, 10:49am: Hi Mike, I got an error message "Error F31 in line 23: Too many responses: excess: 60". I have 63 items to calibrate, so it will not work in Facets? Can Winstep take more than 60 items? Thanks!

Mike.Linacre:

Fan, this error message is for a data-entry error. The number of responses in the data line does not match the elements specified in the data line.

Facets can accept a huge number of responses in a data line, probably more than 32,000, but they must match the range of elements in the data line.

ffwang: Thank you Mike. I did forget something. :-)

bmallinc December 1st, 2013, 8:14pm:

Greetings WINSTEPS users.

I have a question regarding how to show two overlapping test information functions on the same plot. I have a scale with ten items selected using Rasch criteria from an item pool of 25 items. Let’s call this the “IRT subscale”. I have identified the 10 items with highest factor loadings that would have been selected using CTT methods. There are six items in common for the IRT and CCT subscales, and four items unique to each scale. I have entered the 14 combined items in WINSTEPS. I would like to produce two test information plots on the same x-axis constructed from the 14 items, one plot for items 1-10, and a second plot for items 5-10.

Mike.Linacre:

Bmallinc, please use Excel to do this.

1) Run the 14 item analysis

2) Select items 1-10:

Specification menu box: IDELETE=+1-10

Verify that the correct items are selected: Output Tables: Table 14.

3) Output its TIF to Excel:

Output files menu: TCCFILE=, temporary to Excel

4) Reinstate all items

Specification menu box: IDELETE=

5) Select items 5-14:

Specification menu box: IDELETE=+5-14

Verify that the correct items are selected: Output Tables: Table 14.

6) Output its TIF to Excel:

Output files menu: TCCFILE=, temporary to Excel

There are now to Excel files each Excel file has a column of measures and a column of TIF.

Copy the Measure and TIF columns from one Excel file to the other

In the Excel worksheet with both TIF columns, scatter-plot each of the TIFs against its measures.

OK, bmallinc?

bmallinc:

Dr. Linacre,

Thank you so much for your reply. My mistake in the original post, the IRT items are 5-14, not 5-10. In any case, your suggestion worked very well. However, although the two TIF plots have different shapes, they seem to be centered at the same zero point -- that is, each appears to have it's own x-axis. Is there any way to plot both TFF curves on the same x-asix -- perhaps based on the full set of 14 items? The 10 IRT items were selected to provide better "bandwidth" within the 14-item set (+1.2 logits to -.90). The 10 CTT times are concentrated in the lower range of difficulty (+0.3 log its to -.90).

Mike.Linacre:

Bmallinc,

i) Please be sure you are only running one analysis: at step 1). Everything else is from the "Specification" menu box and the other menus.

ii) Be sure to copy both the TIF and ite Measure from one Excel spreadsheet to the other. (The instructions have been amended for this.)

bmallinc:

Dr. Linacre,

I apologize, but I do not see an option in the output files menu for TCCFILE, so instead I launch the graphics module, graph the TIF and then export the data to clipboard, once for items 1-10, then repeat for items 5-14. Please advise about how to access and export TCCFILE?

Thanks

Mike.Linacre:

BMallinc, TCCFILE= was added in Winsteps 3.80 (the current version), but TCC also works from the Graph dialog box.

Please verify at stages 2) and 5) that you have the correct items selected by Output Tables, Table 14.

Ramiel November 26th, 2013, 2:13pm:

Dear Experts,

I am very new to Facets in term of both using the software and its basic idea. I am using Minifac 3.71.3. My situation is that i have 100 students and 2 raters. The first 50 are rated by Rater 1, while the others are rated by Rater 2.

The error message is:

Error F36: All data eliminated as extreme. Dropped elements - first: facet 1 element 9 "9" - last: facet 1 element 99 "99"

Execution halted

I tried to consult help, but I can't find any mistake on y code.

Please help.

Ramiel Knight

ps my specification file is attached to this message.

Mike.Linacre:

Thank you for asking, Ramiel.

Your data are very thin. There is only one rating for each student and their is no overlap for the raters. In order for Rasch-model parameters to be estimated from these data, many constraints must be imposed.

In order to choose reasonable constraints, we need more information. What is the purpose of this data collection and analysis?

Ramiel:

Hi,

Thank you for your quick reply. I am dealing with a large scale marking involving 1500 students which are divided into 20 groups. Each group has 1 and only 1 lecturer and each lecturer marks only his/her students in his/her group.

Every student is assigned to complete exactly the same work, but different lecturers mark with different criteria even with the same marking scheme.

I am trying to minimise the bias/imbalance/inequality to make sure that the whole marking system is fair to the students.

Thank you very much,

Ramiel Knight

Mike.Linacre:

This is a tough situation, Ramiel. This is not really a Rasch analysis.

The best we can probably say is "the 20 groups of students are randomly equivalent" (assuming that students are assigned to the groups effectively at random).

Then we can adjust for differences in lecturer severity and central tendency:

1) compute the overall mean (M) and S.D. (SD) of all the ratings.

2) compute for each group its group mean (GM) and group S.D. (GSD).

3) standardize all the groups to the overall mean and S.D.

Standardized rating for student = ((original rating for student - GM)/GSD)*SD + M

4) Half-round the standardized rating to make the reported "fair" rating.

Gathercole:

Hi Ramiel,

It wasn't clear from your message whether these student scores will factor into the students' actual grade or if you are just experimenting with the data, but if it's the former I would caution you NOT to adjust the student scores with a thin judging plan like the one you have.

Assuming the group abilities are equal is not defensible and there could be blowback if lecturers find out their students' grades were negatively impacted by the procedure.

On the other hand if you're just doing research, feel free to experiment with the analysis however you want :) But going forward, if you want to control for differences in rater severity, each student should be rated by at least two raters.

Mike.Linacre: Ramiel, ... and also at least two ratings of each student by each rater. Instead of one holistic rating, split the rating criteria into at least two "analytic" ratings.

Ramiel:

Thank you Gathercole and Mike for your useful suggestions,

I have been busy with something else for a few days. I just added one more facet to the calculation which is "item". My analysis comprises 3 problems (items) which every students must completes all 3 problems. I think this is equivalent to Mike's suggestion "split the rating criteria into at least two "analytic" ratings"). Unfortunately, each student is still rated by only one rater. The analysis is completed without error message.