Do test items function in different ways for different groups of test-takers? Item functioning is intended to be invariant with respect to irrelevant aspects of the test-takers, such as gender, ethnicity and socio-economic status. But item functioning is expected to be altered by interventions targeted at those items, for instance, the use of calculators in arithmetic tests or the use of assistive devices on mobility items.

Differential Item Functioning (DIF) investigates the items in a test, one at a time, for signs of interactions with sample characteristics. In the widely used Mantel-Haenszel procedure (1959, www.rasch.org/memo39.pdf, RMT 1989 3:2 51-53), reference and focal groups are identified which differ in a discernible way. These groups are stratified into matching ability levels and their relative performance on each item is quantified. The ability levels are usually determined by the total scores on the test. In this way, the DIF analysis for one item is as independent as possible of the DIF analyses of the other items. But a consequence is that the overall impact of item DIF, accumulated across the whole test, is unclear.
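The stratify-and-compare logic described above can be sketched in a few lines. This is a minimal illustration, assuming dichotomous (0/1) items and using the total test score as the matching stratum; the function name, argument layout and group labels are hypothetical, not taken from any particular package.

```python
import math
from collections import defaultdict


def mantel_haenszel_odds_ratio(responses, groups, item):
    """Mantel-Haenszel common odds ratio for one dichotomous item.

    responses: list of per-person lists of 0/1 item scores
    groups:    list of "reference" / "focal" labels, one per person
    item:      index of the studied item
    """
    # One 2x2 table per ability stratum (here: the total test score).
    # Cells: [ref right, ref wrong, focal right, focal wrong]
    tables = defaultdict(lambda: [0, 0, 0, 0])
    for scores, group in zip(responses, groups):
        stratum = sum(scores)
        cell = (0 if group == "reference" else 2) + (0 if scores[item] == 1 else 1)
        tables[stratum][cell] += 1

    numerator = denominator = 0.0
    for ref_right, ref_wrong, focal_right, focal_wrong in tables.values():
        n = ref_right + ref_wrong + focal_right + focal_wrong
        numerator += ref_right * focal_wrong / n
        denominator += ref_wrong * focal_right / n
    if numerator == 0 or denominator == 0:
        raise ValueError("odds ratio undefined: too few cross-classified responses")

    odds_ratio = numerator / denominator
    return odds_ratio, math.log(odds_ratio)
```

A log-odds ratio above zero indicates that, at matched total scores, the item is easier for the reference group than for the focal group. Each call examines one item only, which is why the accumulated effect across the whole test is not visible from these statistics.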

Differential Test Functioning (DTF) compares the functioning of sets of items. Wright and Stone (1979, p. 93) compare the difficulty measures of 14 items obtained from two separate analyses. This technique has been extended to separate analyses of the test responses by reference and focal groups. The effect of separate analyses is that two separate item hierarchies are defined, and the measures of the two groups are obtained in the context of their own hierarchies.

Figure: DTF: QUALEFFO vs. OQLQ

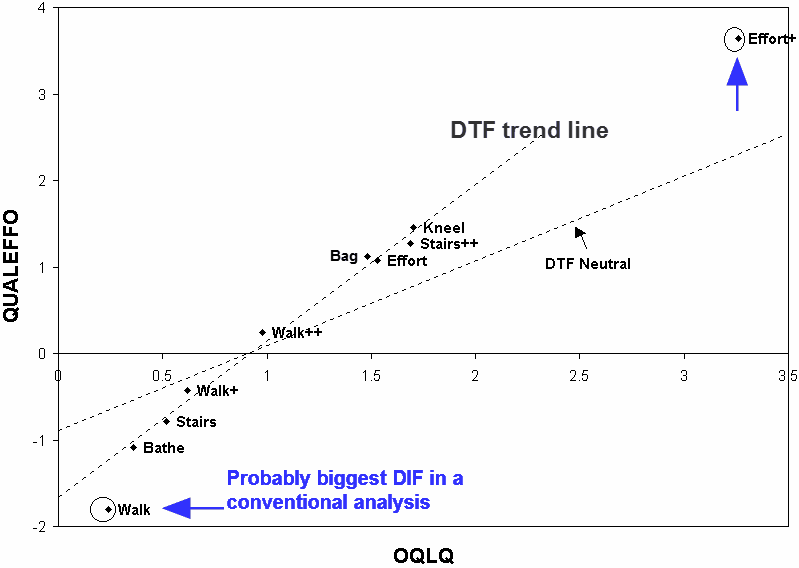

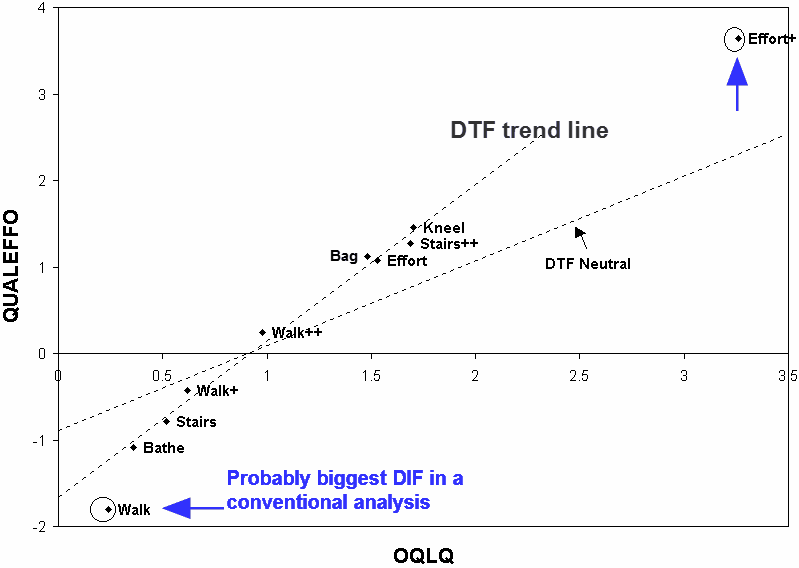

Badia, Prieto et al. (2002) provide a good example of Differential Test Functioning. Two instruments, OQLQ and QUALEFFO, were assigned to randomly equivalent groups of patients. Then the difficulty measures of the common items (originally from the SF-36) were cross-plotted. The Figure shows the results (with abbreviated item labels).

What has happened? The overall item difficulty order has been maintained, but the relationship between the difficulties is QUALEFFO = -1.7 + 1.8 OQLQ. There are also two somewhat off-diagonal items, "Walk" and "Effort+", which are easier for the QUALEFFO sample.
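As a rough sketch of how the reported line can be used, the snippet below predicts a QUALEFFO difficulty from an OQLQ difficulty and flags items that lie far from the line. The intercept and slope are the values quoted above; the function names and the `tolerance` argument are hypothetical, and any item difficulties passed in would have to be read from the paper or the plot.

```python
INTERCEPT = -1.7  # logits, from the reported relationship
SLOPE = 1.8       # from the reported relationship


def expected_qualeffo(oqlq_difficulty):
    """QUALEFFO difficulty predicted by the reported line."""
    return INTERCEPT + SLOPE * oqlq_difficulty


def off_diagonal_items(pairs, tolerance):
    """Return {label: residual} for items further than `tolerance` logits from the line.

    pairs: {label: (oqlq_difficulty, qualeffo_difficulty)}
    A negative residual means the item is easier on the QUALEFFO than the line predicts.
    """
    flagged = {}
    for label, (oqlq, qualeffo) in pairs.items():
        residual = qualeffo - expected_qualeffo(oqlq)
        if abs(residual) > tolerance:
            flagged[label] = residual
    return flagged
```

The tolerance here is only a visual screening threshold, not a significance test.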

An overall difficulty shift is expected. The independent analyses of the two instruments result in each having its own zero-difficulty point set at the mean difficulty of its own items. The common items are the harder items on the OQLQ, but span the difficulty range on the QUALEFFO. This produces the difficulty shift of 1.7 logits.

The slope is explained in a different way. The paper reports the mean-square fit statistics for the common items. Their mean-squares on the QUALEFFO distribute around the expected 1.0, with an average of 1.03, but their mean-squares on the OQLQ are in the range 0.5 to 1.0, with an average of 0.65. This implies that other items in the OQLQ, such as "cut nails", "care for plants", "buy clothes", are less predictable, and so force the more general SF-36 items to overfit. If the common items were calibrated independently of the other items in the OQLQ, their own logit range would be approximately 1/(average mean-square) = 1 / 0.65 = 1.5 times wider (see "Using Rasch Fit statistics to Rescale External Numbers or Measures into Rasch Anchor Values"). This would roughly match the 1.8 times wider observed in the QUALEFFO.
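The arithmetic behind this approximation is small enough to check directly. The sketch below uses only the numbers quoted in the text (average mean-square 0.65, observed slope 1.8); the function name is hypothetical.

```python
def range_expansion(average_mean_square):
    """Approximate factor by which the logit range widens: 1 / (average mean-square)."""
    return 1.0 / average_mean_square


predicted = range_expansion(0.65)  # about 1.54, quoted as 1.5 in the text
observed = 1.8                     # slope of the cross-plot line
shortfall = observed / predicted   # part of the slope not accounted for by overfit
```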

The two circled off-diagonal items, "Walk" and "Effort+", are relatively easier for the QUALEFFO sample. This may be explained by sample differences. Despite the intention of having randomly equivalent samples, the paper's demographic Table reports the QUALEFFO sample to have better general health, more vitality and better physical functioning than the OQLQ sample. Even though each of these items has a standard error on each instrument, it is not possible to make precise DIF tests because of the changes of scale and uncontrolled interactions with items unique to each instrument.

Further investigation of "Walk" and "Effort+" requires a definitive examination of Differential Item Functioning across the two samples. A joint analysis of only the common items would remove the distorting effects of the items unique to each instrument. Further, the analyses would then share the same logit metric. The interactions between sample differences and item difficulties could then be precisely determined.
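Once both samples are measured on the same logit metric, one commonly used check is the DIF contrast: the difference between an item's two group-specific difficulties, divided by the joint standard error of that difference. The sketch below assumes those difficulties and standard errors have already been estimated from the joint analysis. It illustrates the general approach and is not a procedure reported in the paper, and the function name is hypothetical.

```python
import math


def dif_contrast(difficulty_a, se_a, difficulty_b, se_b):
    """DIF contrast between two groups' difficulty estimates for one item.

    Returns (contrast in logits, joint standard error, approximate t-statistic).
    Both estimates must be expressed in the same logit metric.
    """
    contrast = difficulty_a - difficulty_b
    joint_se = math.sqrt(se_a ** 2 + se_b ** 2)
    return contrast, joint_se, contrast / joint_se
```

A large absolute t-statistic suggests that the difference in difficulty between the samples exceeds what measurement error alone would produce.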

John M. Linacre

Badia X, Prieto L, Roset M, Díez-Pérez A, Herdman M (2002) Development of a short osteoporosis quality of life questionnaire by equating items from two existing instruments. Journal of Clinical Epidemiology, 55, 32-40.

Differential Item and Test Functioning (DIF & DTF). Badia X, Prieto L, Linacre JM. … Rasch Measurement Transactions, 2002, 16:3 p.889


The URL of this page is www.rasch.org/rmt/rmt163g.htm
